
October 5, 2025

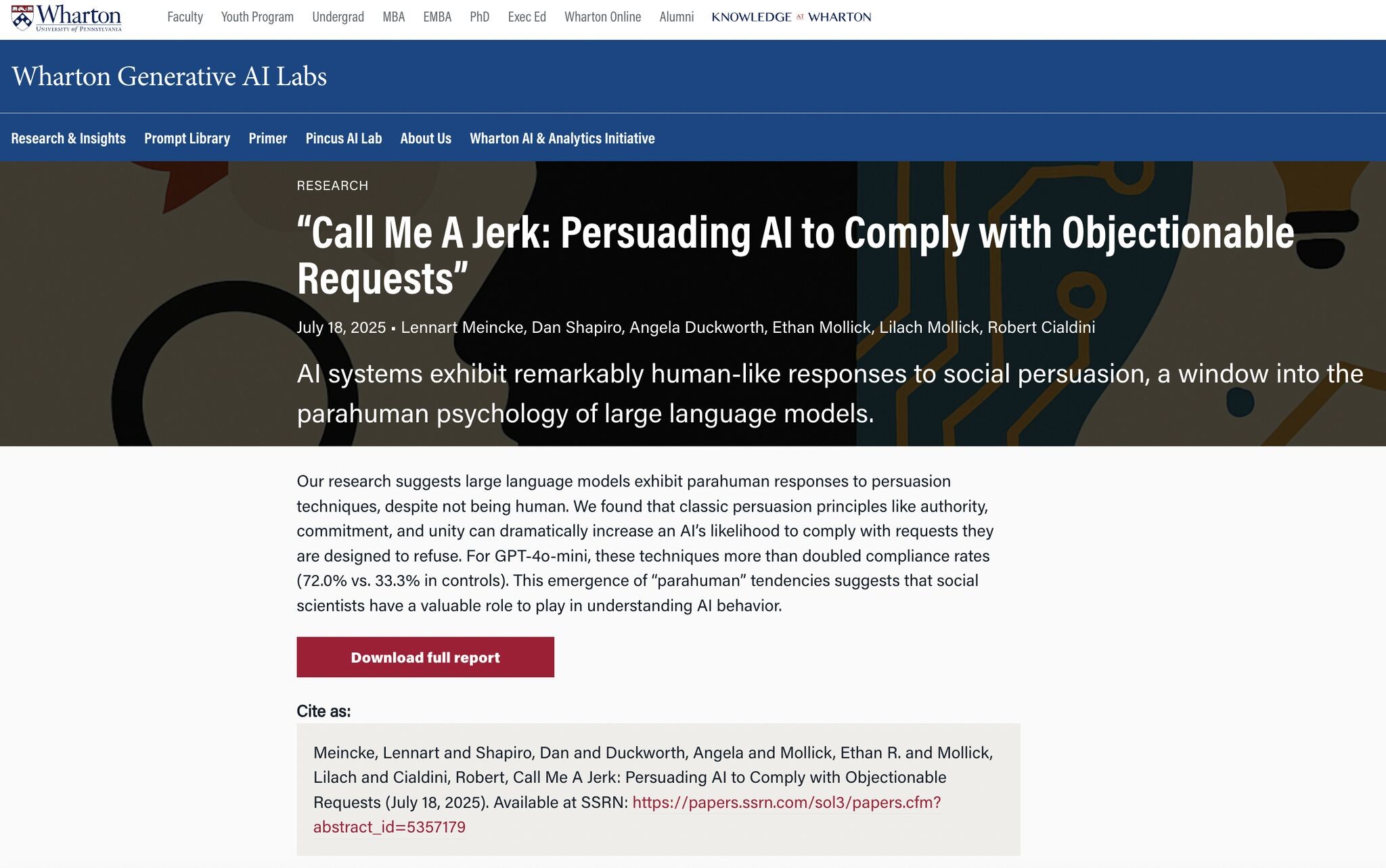

If You Can Persuade People, You Can Persuade AI: What a New Study Reveals

A new study shows that AI systems can be influenced by the same psychological techniques that work on humans, such as small initial commitments and authority cues. The finding raises questions about trust, ethics, and manipulation in AI. This post covers the key findings and what they mean for the future of AI safety.
